
Introduction

On the previous page, scene recognition (15-class classification) was performed with Chainer. On this page, a simplified Fully Convolutional Network (FCN) is implemented in Chainer.

Computation Environment

The same instance as on the previous page, a g2.2xlarge on Amazon EC2, is used.

Dataset

In this work, an FCN is trained on the VOC2012 dataset, which includes ground truth segmentations. Examples are given below:

The dataset contains 2913 images. I split them at a ratio of 4:1: the larger set is the training dataset and the smaller one is the testing dataset.

| number of train | number of test |
| --- | --- |
| 2330 | 580 |
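The counts in the table can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming the 4:1 split is taken first and each count is then rounded down to a multiple of its mini-batch size (10 for training, 5 for testing); the function name `split_counts` is hypothetical, and the post does not state which images were dropped.

```python
def split_counts(n_images, train_parts=4, test_parts=1,
                 train_batch=10, test_batch=5):
    """Split n_images by train_parts:test_parts, then round each count
    down to a multiple of its mini-batch size (assumed rounding rule)."""
    n_train = n_images * train_parts // (train_parts + test_parts)
    n_test = n_images - n_train
    n_train -= n_train % train_batch  # drop the remainder of the last batch
    n_test -= n_test % test_batch
    return n_train, n_test


n_train, n_test = split_counts(2913)
print(n_train, n_test)  # 2330 580
```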

The numbers of training and testing images are rounded to multiples of 10 and 5, respectively, which are the mini-batch sizes for training and testing. Although the original FCN operates on an input of any size, for simplicity the FCN in this work takes a fixed-size (224$\times$224) input. Therefore, all images are resized in advance as shown below:

I cropped the largest square centered on each image and resized it to 224$\times$224.
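The crop box for such a centered square can be computed as below. This is only a sketch of the geometry: the helper `center_square_box` is a hypothetical name, and the resize step is shown as a comment that assumes Pillow, since the post does not say which image library was used.

```python
def center_square_box(width, height):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side


box = center_square_box(500, 375)
print(box)  # (62, 0, 437, 375)

# With Pillow (an assumption), the crop and resize would look like:
#   image = image.crop(box).resize((224, 224))
# For the label images, nearest-neighbor resampling (Image.NEAREST) is the
# safe choice, because interpolation would create class labels that do not exist.
```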

VOC2012 provides 21 classes as specified below:

| label | category |
| --- | --- |
| 0 | background |
| 1 | aeroplane |
| 2 | bicycle |
| 3 | bird |
| 4 | boat |
| 5 | bottle |
| 6 | bus |
| 7 | car |
| 8 | cat |
| 9 | chair |
| 10 | cow |
| 11 | diningtable |
| 12 | dog |
| 13 | horse |
| 14 | motorbike |
| 15 | person |
| 16 | potted plant |
| 17 | sheep |
| 18 | sofa |
| 19 | train |
| 20 | tv/monitor |

Network Structure

The detailed structure of the network used in this work is as follows:

In the original FCN, there are also some layers that follow the layer pool5. For simplicity, these layers are removed in this work.

- name: the layer name
- input: the size of the input feature map
- in_channels: the number of input feature maps
- out_channels: the number of output feature maps
- ksize: the kernel size
- stride: the stride
- pad: the padding size
- output: the size of the output feature map
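The relation between input, ksize, stride, pad, and output can be checked with the usual convolution size formula. The sketch below assumes a VGG-16 style layout (3$\times$3 convolutions with stride 1 and pad 1, followed by 2$\times$2 max pooling with stride 2); these values are an assumption for illustration and are not read from the table.

```python
def conv_out_size(size, ksize, stride, pad):
    """Output size of a convolution or pooling layer along one axis."""
    return (size + 2 * pad - ksize) // stride + 1


size = 224
pool_sizes = []
for n_convs in (2, 2, 3, 3, 3):  # convolutions per stage in VGG-16 (assumed)
    for _ in range(n_convs):
        # A 3x3 convolution with stride 1 and pad 1 keeps the size unchanged.
        size = conv_out_size(size, ksize=3, stride=1, pad=1)
    # A 2x2 pooling with stride 2 halves the size.
    size = conv_out_size(size, ksize=2, stride=2, pad=0)
    pool_sizes.append(size)

print(pool_sizes)  # [112, 56, 28, 14, 7]
```

Under these assumptions, pool3, pool4, and pool5 produce 28$\times$28, 14$\times$14, and 7$\times$7 feature maps, respectively.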

pool3, pool4, and pool5 are followed by score-pool3, score-pool4, and score-pool5, respectively. Their parameters are shown below:

I call the outputs of score-pool3, score-pool4, and score-pool5 p3, p4, and p5, respectively.

The layers upsample_pool4 and upsample_pool5, shown in the table above, are applied to p4 and p5, respectively. After their outputs and p3 are summed, the sum is upsampled back to the original image size through the layer upsample_final shown in the table. This net structure corresponds to the FCN-8s described in the original FCN.
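For the sum to be possible, the two upsampling layers applied to p4 and p5 must produce maps of the same size as p3. The sketch below checks this with the standard deconvolution size formula. The feature-map sizes (28, 14, 7) follow from a 224$\times$224 input to a VGG-16 style network, and the ksize, stride, and pad values are assumptions chosen for illustration, not the values from the table.

```python
def deconv_out_size(size, ksize, stride, pad):
    """Output size of a deconvolution (transposed convolution) along one axis."""
    return stride * (size - 1) + ksize - 2 * pad


size_p3, size_p4, size_p5 = 28, 14, 7  # assumed sizes of p3, p4, p5

# Hypothetical parameters: 2x upsampling for p4 and 4x upsampling for p5.
up4 = deconv_out_size(size_p4, ksize=4, stride=2, pad=1)
up5 = deconv_out_size(size_p5, ksize=8, stride=4, pad=2)

# All three maps must agree in size before they are summed element-wise.
assert size_p3 == up4 == up5

# Hypothetical parameters: 8x upsampling of the sum back to the input size.
final = deconv_out_size(size_p3, ksize=16, stride=8, pad=4)
print(up4, up5, final)  # 28 28 224
```

The factor of 8 in the last step is what gives FCN-8s its name.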

Implementation of Network

The network can be written in Chainer like this:

-- myfcn.py --

I assigned the label -1 to pixels on the border between objects and the background. As a result, those pixels make no contribution when softmax_cross_entropy is calculated (see the Chainer specification for details). The function calculate_accuracy also excludes the border pixels. The function add is defined as:

-- add.py --
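Separately from the files above, the idea of excluding the border pixels from the accuracy can be illustrated in plain Python. This is a minimal sketch and not the author's calculate_accuracy: the function `pixel_accuracy` and the toy label maps are hypothetical. The value -1 matches the default `ignore_label` of Chainer's `softmax_cross_entropy`.

```python
IGNORE_LABEL = -1  # label given to border pixels


def pixel_accuracy(predicted, truth, ignore_label=IGNORE_LABEL):
    """Fraction of correctly labeled pixels, skipping ignored pixels."""
    correct = 0
    counted = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            if t == ignore_label:
                continue  # border pixel: counts neither for nor against
            counted += 1
            correct += int(p == t)
    return correct / counted if counted else 0.0


# Toy 2x4 label maps (made up): 0 = background, 15 = person, -1 = border.
truth = [[0, 0, -1, 15],
         [0, -1, 15, 15]]
predicted = [[0, 0, 15, 15],
             [0, 0, 0, 15]]

accuracy = pixel_accuracy(predicted, truth)
print(accuracy)  # 5 of the 6 counted pixels are correct
```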

Training

The script to train is as follows:

-- train.py --

-- mini_batch_loader.py --

I used copy_model, which is described on the page. The file VGGNet.py was downloaded from the page. The script train.py performs the following steps:

- make an instance of the type MiniBatchLoader
- make an instance of the type VGGNet
- make an instance of the type MyFcn
- copy the parameters of the VGGNet instance to the MyFcn instance
- select MomentumSGD as the optimization algorithm
- run a loop to train the net
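The parameter-copy step can be pictured as copying every parameter whose name and shape match between the two networks. The sketch below is a stand-in that uses plain dictionaries instead of Chainer links; `copy_matching_params` and the layer names are hypothetical, and it is not the copy_model function mentioned above.

```python
def copy_matching_params(src, dst):
    """Copy src[name] into dst[name] when the name exists in both
    and the parameter lengths agree. Returns the copied names."""
    copied = []
    for name, values in src.items():
        if name in dst and len(dst[name]) == len(values):
            dst[name] = list(values)  # copy, so dst does not alias src
            copied.append(name)
    return copied


# Toy "networks": only conv1_1 exists in both, so only it is copied.
vgg = {"conv1_1": [0.1, 0.2], "fc6": [0.5]}
fcn = {"conv1_1": [0.0, 0.0], "score_pool3": [0.0]}

copied = copy_matching_params(vgg, fcn)
print(copied)  # ['conv1_1']
```

Layers that exist only in the FCN, such as the score and upsampling layers, keep their initial values and are learned during training.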

Loading all the training data into GPU memory at once causes the error "cudaErrorMemoryAllocation: out of memory". Therefore, only one mini-batch of data is loaded each time the training procedure requires it.
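One way to load only a mini-batch at a time is to iterate over index batches and read the corresponding images inside the loop. The generator below is a minimal sketch of that idea; `iter_minibatches` is a hypothetical name and this is not the MiniBatchLoader from mini_batch_loader.py.

```python
import random


def iter_minibatches(n_samples, batch_size, shuffle=True, seed=None):
    """Yield lists of sample indices, one mini-batch at a time."""
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, n_samples, batch_size):
        yield indices[start:start + batch_size]


batches = list(iter_minibatches(2330, 10, seed=0))
print(len(batches))  # 233

# In a training loop, only the images of the current batch would be read
# from disk and transferred to the GPU, for example:
#   for batch in iter_minibatches(2330, 10):
#       x, t = load_images(batch)  # load_images is hypothetical
```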

Results

Training was terminated at the 62nd epoch. The accuracies and the losses for the training and testing datasets are shown below.

-- Training Dataset --

-- Testing Dataset --

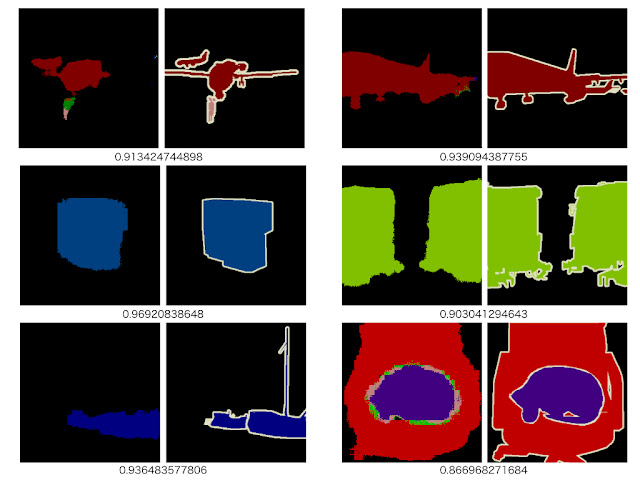

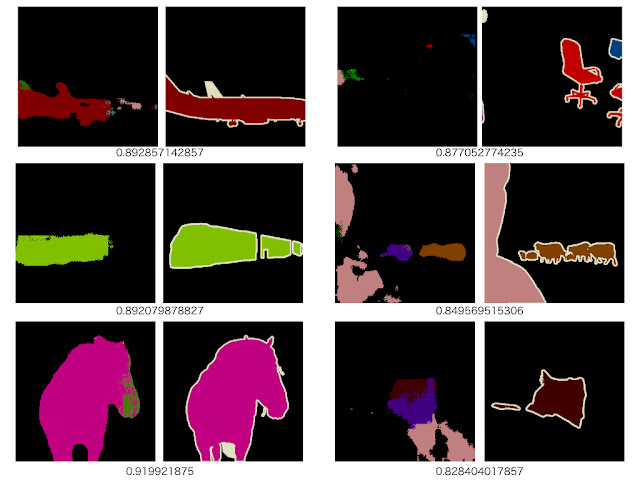

The accuracy is defined as the percentage of correctly classified pixels in each image, averaged over the training or testing dataset. The accuracy and the loss for the training dataset behave as expected, while for the testing dataset the loss curve surges at some point, which seems to indicate overfitting. The final accuracies are 99% for the training dataset and 82% for the testing dataset. A sample set of an input image, a predicted segmentation, and a ground truth is shown with the accuracy below:

Other samples of predicted and ground truth images are given below:

-- Training Dataset --

-- Testing Dataset --

Because the background pixels inflate the accuracy, I think that the accuracy has to exceed 90% to give satisfactory results.
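The effect of the background on the accuracy can be seen in a toy example. The numbers below are made up for illustration and are not measurements from this experiment: an image with 75 background pixels and 25 person pixels is "segmented" by a model that predicts background everywhere.

```python
# Made-up 100-pixel image: 0 = background, 15 = person.
truth = [0] * 75 + [15] * 25
predicted = [0] * 100  # a model that only ever predicts background

# Accuracy over all pixels.
overall = sum(p == t for p, t in zip(predicted, truth)) / len(truth)

# Accuracy over the object (non-background) pixels only.
object_pairs = [(p, t) for p, t in zip(predicted, truth) if t != 0]
on_objects = sum(p == t for p, t in object_pairs) / len(object_pairs)

print(overall, on_objects)  # 0.75 0.0
```

Even though no object pixel is recognized, the overall accuracy is 75% in this toy case, which is why a high threshold is needed before the segmentations look satisfactory.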