Introduction

A method for 3D object recognition based on the work by H. Su et al. has already been proposed and evaluated on two 3D object datasets, ModelNet10 and ModelNet40. The classification accuracies for ModelNet10 and ModelNet40 were reported on separate pages. That method uses a convolutional neural network (CNN) as both a feature extractor and a classifier. On this page the method is extended so that the CNN serves only as a feature extractor and the classification is performed by a support vector machine (SVM). The extended method turns out to be slightly more accurate than the previous methods.

Classification Accuracies

The following table shows classification accuracies for the previous and the proposed methods. The previous results are quoted from the Princeton ModelNet page.

| Algorithm | Accuracy (ModelNet10) | Accuracy (ModelNet40) |
|---|---|---|
| MVCNN | | 90.1% |
| VoxNet | 92% | 80.3% |
| DeepPano | 85.45% | 77.63% |
| 3DShapeNets | 83.5% | 77% |
| the proposed method | 92.84% | 90.92% |

Proposed Method

In the proposed method the CNN is used as a feature extractor and the SVM is applied as a classifier.

Feature Extractor:

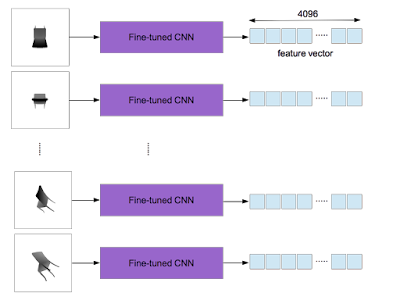

The CNN model (bvlc_reference_caffenet.caffemodel) that Caffe provides is fine-tuned on the training set of either ModelNet10 or ModelNet40. The fine-tuning procedure is described in detail on a separate page. After training, the output of the layer "fc7" is used as a feature vector (4096 dimensions). As explained previously, in the proposed method each 3D object yields 20 gray images, which are converted from depth images. The fine-tuned CNN is applied to each of them as the feature extractor, so 20 feature vectors are obtained per 3D object.

Following the work by H. Su et al., the element-wise maximum across the 20 vectors is taken to produce a single 4096-dimensional feature vector, as illustrated in the figure above.
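The pooling step can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for the experiments: the function name `max_pool_views` is hypothetical, and the toy input uses 3 views with 4 dimensions in place of the 20 views with 4096 dimensions described above.

```python
from typing import List, Sequence


def max_pool_views(view_features: Sequence[Sequence[float]]) -> List[float]:
    """Return the element-wise maximum across per-view feature vectors."""
    if not view_features:
        raise ValueError("need at least one view")
    dim = len(view_features[0])
    if any(len(v) != dim for v in view_features):
        raise ValueError("all view vectors must have the same length")
    # zip(*...) groups the i-th element of every view into one column.
    return [max(column) for column in zip(*view_features)]


# Toy stand-in for the per-view "fc7" outputs (hypothetical values).
views = [
    [0.0, 2.0, 1.0, 0.5],
    [1.5, 0.0, 1.0, 0.0],
    [0.2, 0.3, 4.0, 0.1],
]
pooled = max_pool_views(views)
print(pooled)  # [1.5, 2.0, 4.0, 0.5]
```

The result has the same length as each input vector regardless of the number of views, which is what allows a single vector per 3D object to be passed to the classifier.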

Classifier:

Since there is one feature vector per 3D object, classification by an SVM is straightforward. The current work uses LIBLINEAR, a library that implements linear SVMs. An SVM is trained with the LIBLINEAR program "train", once on the ModelNet10 training set and once on the ModelNet40 training set. To improve the accuracy, the square root of every element of the feature vector is computed before the linear SVM is applied; in other words, a Hellinger kernel classifier is employed. After training, the LIBLINEAR program "predict" is run on the testing sets of both ModelNet10 and ModelNet40.
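The square-root step and the hand-off to LIBLINEAR can be sketched as follows. This is a minimal illustration, not the original preprocessing script: the helper names `hellinger_map` and `to_liblinear_line` are hypothetical, the sample values are made up, and the features are assumed to be non-negative. The only LIBLINEAR detail relied on is its sparse text input format, `<label> <index>:<value> ...` with 1-based indices. The Hellinger kernel is usually defined on L1-normalized vectors; the text above mentions no normalization, so none is applied here.

```python
import math
from typing import List, Sequence


def hellinger_map(features: Sequence[float]) -> List[float]:
    """Take the element-wise square root of a non-negative feature vector."""
    if any(x < 0 for x in features):
        raise ValueError("square-root mapping assumes non-negative features")
    return [math.sqrt(x) for x in features]


def to_liblinear_line(label: int, features: Sequence[float]) -> str:
    """Format one sample as '<label> <index>:<value> ...' (1-based, zeros omitted)."""
    pairs = [f"{i}:{v:.6g}" for i, v in enumerate(features, start=1) if v != 0.0]
    return " ".join([str(label)] + pairs)


# Hypothetical pooled feature vector (4 dimensions instead of 4096).
sample = [4.0, 0.0, 0.25, 9.0]
mapped = hellinger_map(sample)
print(to_liblinear_line(3, mapped))  # 3 1:2 3:0.5 4:3
```

A linear SVM on the mapped vectors computes inner products of the form sum_i sqrt(x_i) * sqrt(y_i) = sum_i sqrt(x_i * y_i), which is why the square-root step amounts to using a Hellinger kernel while keeping the training cost of a linear model.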

References

- MVCNN: H. Su, S. Maji, E. Kalogerakis, and E. Learned-Miller. Multi-view Convolutional Neural Networks for 3D Shape Recognition. ICCV 2015.

- VoxNet: D. Maturana and S. Scherer. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. IROS 2015.

- DeepPano: B. Shi, S. Bai, Z. Zhou, and X. Bai. DeepPano: Deep Panoramic Representation for 3-D Shape Recognition. Signal Processing Letters 2015.

- 3DShapeNets: Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao. 3D ShapeNets: A Deep Representation for Volumetric Shapes. CVPR 2015.
